Cheat sheet on Neural Networks 1

The following article is a cheat sheet on neural networks. It is based on the following course and article:

- the excellent Machine Learning course on Coursera from Professor Andrew Ng, Stanford,

- the very good article from Michael Nielsen, explaining the backpropagation algorithm.

Why are neural networks powerful?

It has been proven mathematically (this result is known as the universal approximation theorem) that:

Suppose we’re given a [continuous] function f(x) which we’d like to compute to within some desired accuracy ϵ>0. The guarantee is that by using enough hidden neurons we can always find a neural network whose output g(x) satisfies: |g(x)−f(x)|<ϵ, for all inputs x.

Michael Nielsen — From the following article
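The theorem only guarantees that such a network exists, but the idea behind Nielsen's visual argument can be sketched in a few lines. With a very large weight, a sigmoid neuron behaves almost like a step function, and a linear output neuron can add such steps into a staircase that follows f. The sketch below is an illustration under stated assumptions, not a proof: it uses plain Python, a single hidden layer, a hypothetical helper `build_step_network`, and the example target $f(x) = \sin(2\pi x)$ on $[0, 1]$.

```python
import math


def sigmoid(t):
    # Numerically stable logistic function (no overflow for large |t|)
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def build_step_network(f, n_hidden, steepness):
    """One-hidden-layer network approximating a continuous f on [0, 1].

    Hidden neuron j computes sigmoid(steepness * x + b_j) with
    b_j = -steepness * s_j: for a large steepness it is almost a step at s_j.
    The linear output neuron adds these steps into a staircase that follows f.
    """
    n_bins = n_hidden + 1
    # Height of the staircase on each bin: f at the centre of the bin
    heights = [f((k + 0.5) / n_bins) for k in range(n_bins)]
    steps = [k / n_bins for k in range(1, n_bins)]          # s_1, ..., s_n
    biases = [-steepness * s for s in steps]                 # b_j
    out_weights = [heights[k] - heights[k - 1] for k in range(1, n_bins)]
    out_bias = heights[0]

    def g(x):
        hidden = (sigmoid(steepness * x + b) for b in biases)
        return out_bias + sum(v * h for v, h in zip(out_weights, hidden))

    return g


def target(x):
    return math.sin(2 * math.pi * x)


xs = [i / 1000 for i in range(1001)]
errors = {}
for n_hidden in (20, 200):
    # Keep steepness proportional to the number of steps so each step stays sharp
    g = build_step_network(target, n_hidden, steepness=20.0 * (n_hidden + 1))
    errors[n_hidden] = max(abs(g(x) - target(x)) for x in xs)
    print(f"{n_hidden} hidden neurons: max |g(x) - f(x)| = {errors[n_hidden]:.4f}")
```

The maximum error shrinks as the number of hidden neurons grows, which is the "by using enough hidden neurons" part of the statement.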

Conventions

Let’s define a neural network with the following conventions:

$L$ = total number of layers in the network.

$s_l$ = number of units (not counting the bias unit) in layer $l$.

$K$ = number of units in the output layer ($K = s_L$).

With:

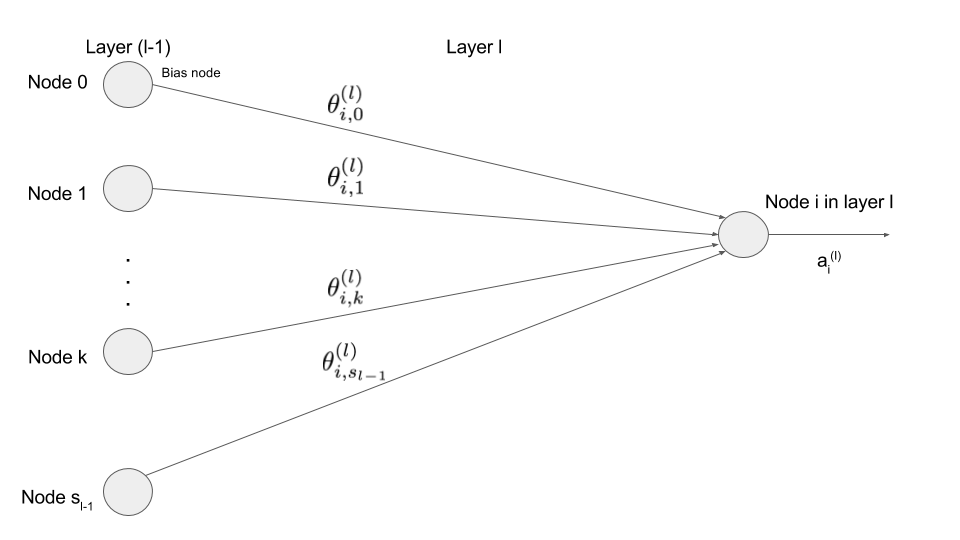

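$$\forall l \in \{2, \dots, L-1\}, \quad a^{(l)} \in \mathbb{R}^{s_l+1} \quad \text{and} \quad \begin{cases} a^{(l)}_0 = 1 & \text{(bias node)} \\ a^{(l)}_i = g\left(z^{(l)}_i\right), & \forall i \in \{1, \dots, s_l\} \\ z^{(l)}_i = \left[\theta^{(l)}_i\right]^T . a^{(l-1)}, & \forall i \in \{1, \dots, s_l\} \end{cases}$$

with $\theta^{(l)}_i \in \mathbb{R}^{s_{l-1}+1}$ and $z^{(l)} \in \mathbb{R}^{s_l}$.

We define the matrix $\theta^{(l)}$ of the weights of layer $l$ as follows (row $i$ is $\left[\theta^{(l)}_i\right]^T$, and column $0$ holds the bias weights):

$$\theta^{(l)} \in \mathbb{R}^{s_l \times (s_{l-1}+1)}$$

Hence, we have the following relation:

$$a^{(l)} = \begin{pmatrix} 1 \\ g\left(\theta^{(l)} . a^{(l-1)}\right) \end{pmatrix}$$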
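The relation above is the forward pass. The following sketch computes it layer by layer in plain Python. It assumes a sigmoid activation $g$ (the article does not fix $g$), stores each $\theta^{(l)}$ as a list of rows with the bias weight in column 0, and gives the output layer no bias unit, so that $a^{(L)}$ has $K = s_L$ entries. The function name `forward` is illustrative.

```python
import math


def sigmoid(t):
    # Logistic activation g(z) = 1 / (1 + exp(-z)), written to avoid overflow
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def forward(thetas, x):
    """Compute a^(1), ..., a^(L) for one input vector x.

    thetas = [theta^(2), ..., theta^(L)]; theta^(l) is a list of s_l rows,
    each with s_(l-1) + 1 weights (column 0 = bias weight).
    """
    a = [1.0] + list(x)                      # a^(1): inputs plus bias unit a_0 = 1
    activations = [a]
    for idx, theta in enumerate(thetas):
        # z^(l)_i = [theta^(l)_i]^T . a^(l-1)
        z = [sum(w * v for w, v in zip(row, a)) for row in theta]
        g_z = [sigmoid(v) for v in z]
        is_output_layer = idx == len(thetas) - 1
        # Hidden layers get a bias unit; the output layer a^(L) has K = s_L units
        a = g_z if is_output_layer else [1.0] + g_z
        activations.append(a)
    return activations


# Example: 2 inputs, 3 hidden units, 1 output (L = 3)
theta2 = [[0.0, 0.0, 0.0] for _ in range(3)]   # all-zero weights: g(0) = 0.5
theta3 = [[0.0, 1.0, 1.0, 1.0]]                # sums the three hidden outputs
acts = forward([theta2, theta3], [1.0, 2.0])
print(acts[1])  # [1.0, 0.5, 0.5, 0.5]
print(acts[2])  # a^(3) = [g(1.5)], about [0.8176]
```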

The cost function of a Neural Network

The training set is defined by: $\left(x^{(1)}, y^{(1)}\right), \dots, \left(x^{(m)}, y^{(m)}\right)$

$x^{(i)}$ and $y^{(i)}$ are vectors with the same dimensions as the input layer and the output layer of the neural network, respectively.

The cost function of a neural network is the following:

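$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \sum_{k=1}^{K} \left[\text{cost}\left(a^{(L)}_k, y^{(i)}_k\right)\right] + \frac{\lambda}{2m} \sum_{l=2}^{L} \sum_{i=1}^{s_l} \sum_{j=1}^{s_{l-1}} \left(\theta^{(l)}_{i,j}\right)^2$$

$a^{(L)}_k$ is the $k$-th output of the neural network for the input $x^{(i)}$; it depends on the weights $\theta$ of the neural network.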

Please note that the regularization term does not include the weights of the bias nodes.
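To make the formula concrete, here is a sketch that evaluates $J(\theta)$ from already-computed network outputs. The article leaves $\text{cost}(a, y)$ unspecified. This sketch assumes $\text{cost}(a, y) = y \log a + (1 - y) \log(1 - a)$, the logistic cost used in Professor Ng's course, which together with the leading minus sign gives the cross-entropy. The name `cost_function` and its parameters are illustrative.

```python
import math


def cost_function(outputs, targets, thetas, lam):
    """Regularized cost J(theta) over m training examples.

    outputs[i][k]: a^(L)_k computed for the input x^(i)
    targets[i][k]: y^(i)_k
    thetas: [theta^(2), ..., theta^(L)], column 0 of each row = bias weight
    lam: regularization parameter lambda
    """
    m = len(outputs)
    # Assumed per-unit term: cost(a, y) = y*log(a) + (1 - y)*log(1 - a),
    # so that -1/m * sum(...) is the usual cross-entropy (logistic) cost.
    data_term = -sum(
        y * math.log(a) + (1 - y) * math.log(1 - a)
        for a_vec, y_vec in zip(outputs, targets)
        for a, y in zip(a_vec, y_vec)
    ) / m
    # Regularization: squares of all weights except the bias column j = 0
    reg_term = lam / (2 * m) * sum(
        w * w for theta in thetas for row in theta for w in row[1:]
    )
    return data_term + reg_term


# One example, one output unit, one layer of weights (bias weight 5.0 is ignored)
J = cost_function([[0.5]], [[1.0]], [[[5.0, 1.0, 2.0]]], lam=1.0)
print(J)  # -log(0.5) + 1/2 * (1^2 + 2^2) = log(2) + 2.5, about 3.1931
```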

Now, the objective is to train the neural network and find the minimum of the cost function J(𝜃).

Mathematical reminder: the chain rule

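Let’s define the functions $f$, $g$ and $h$ as follows:

$$f: \mathbb{R}^n \to \mathbb{R}, \qquad g: \mathbb{R}^p \to \mathbb{R}^n, \qquad h = f \circ g: \mathbb{R}^p \to \mathbb{R}$$

The derivative of $h$ is given by the chain rule:

$$\forall i \in \{1, \dots, p\}, \quad \frac{\partial h}{\partial x_i} = \sum_{k=1}^{n} \frac{\partial f}{\partial g_k} \frac{\partial g_k}{\partial x_i}$$

(See the following online course on partial derivatives from MIT.)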
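A quick way to convince oneself of this formula is to compare it with finite differences on a small example. The functions below ($p = 2$, $n = 3$) are arbitrary choices made for illustration; `grad_numerical` uses central differences only as a check.

```python
import math

# Hypothetical example with p = 2 inputs and n = 3 intermediate functions


def g(x):
    # g: R^2 -> R^3
    x1, x2 = x
    return [x1 * x2, math.sin(x1), x2 ** 2]


def jacobian_g(x):
    # jac[k][i] = d g_k / d x_i
    x1, x2 = x
    return [[x2, x1], [math.cos(x1), 0.0], [0.0, 2 * x2]]


def f(u):
    # f: R^3 -> R
    u1, u2, u3 = u
    return u1 + u2 * u3


def grad_f(u):
    # d f / d u_k
    _, u2, u3 = u
    return [1.0, u3, u2]


def h(x):
    return f(g(x))


def grad_h_chain_rule(x):
    # dh/dx_i = sum over k of (df/dg_k) * (dg_k/dx_i)
    df = grad_f(g(x))
    jac = jacobian_g(x)
    return [sum(df[k] * jac[k][i] for k in range(len(df))) for i in range(len(x))]


def grad_numerical(func, x, eps=1e-6):
    # Central finite differences, used only to check the chain-rule result
    grads = []
    for i in range(len(x)):
        plus, minus = list(x), list(x)
        plus[i] += eps
        minus[i] -= eps
        grads.append((func(plus) - func(minus)) / (2 * eps))
    return grads


x = [0.7, -1.3]
print(grad_h_chain_rule(x))
print(grad_numerical(h, x))  # should agree closely with the line above
```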

The backpropagation algorithm

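We use gradient descent to find the weights $\theta$ that minimize $J$: $\min_\theta J(\theta)$.

Gradient descent requires computing, for every layer $l$ and every weight $(i, j)$:

$$\frac{\partial J(\theta)}{\partial \theta^{(l)}_{i,j}}$$

In the following sections, we consider only the first term of $J(\theta)$ (as if the regularization parameter $\lambda = 0$). The partial derivative of the second term is easy to compute: it equals $\frac{\lambda}{m} \theta^{(l)}_{i,j}$ for $j \geq 1$, and $0$ for the bias weights ($j = 0$).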
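These partial derivatives are all that gradient descent needs: at each step, every weight moves a small amount against its partial derivative. Below is a minimal sketch of the update rule $\theta \leftarrow \theta - \alpha \, \partial J / \partial \theta$. It assumes a fixed learning rate $\alpha$ (the parameter `learning_rate`, not a symbol from the article) and uses a toy two-parameter cost with a known gradient in place of the network's $J$.

```python
def gradient_descent(grad, theta0, learning_rate=0.1, n_iters=200):
    """Plain gradient descent: theta <- theta - alpha * dJ/dtheta."""
    theta = list(theta0)
    for _ in range(n_iters):
        gradient = grad(theta)
        theta = [t - learning_rate * gt for t, gt in zip(theta, gradient)]
    return theta


def toy_grad(theta):
    # Gradient of the toy cost J(theta) = (theta_1 - 3)^2 + 2 * (theta_2 + 1)^2
    return [2 * (theta[0] - 3), 4 * (theta[1] + 1)]


theta_min = gradient_descent(toy_grad, [0.0, 0.0])
print(theta_min)  # very close to the minimum [3.0, -1.0]
```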

The following course from Andrej Karpathy gives an outstanding explanation of how partial derivatives work at the level of a single node in the backpropagation algorithm.

Definition of δ

Let’s define δ. When δ of layer $l$ is multiplied by the output of layer $(l-1)$, we obtain the partial derivative of the cost function with respect to the weights θ of layer $l$.

Let’s use the chain rule to expand this derivative through $z^{(l)}$:

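$$\frac{\partial J(\theta)}{\partial \theta^{(l)}_{i,j}} = \sum_{k=1}^{s_l} \frac{\partial J(\theta)}{\partial z^{(l)}_k} \frac{\partial z^{(l)}_k}{\partial \theta^{(l)}_{i,j}}$$

(Recall that $J$ depends on the weights $\theta^{(l)}$ only through $z^{(l)}$.)

As:

$$z^{(l)}_k = \left[\theta^{(l)}_k\right]^T . a^{(l-1)} = \sum_{p=0}^{s_{l-1}} \theta^{(l)}_{k,p} \times a^{(l-1)}_p$$

only the term with $k = i$ and $p = j$ depends on $\theta^{(l)}_{i,j}$, so $\frac{\partial z^{(l)}_k}{\partial \theta^{(l)}_{i,j}} = a^{(l-1)}_j$ if $k = i$, and $0$ otherwise.

We define the error δ:

$$\delta^{(l)}_k = \frac{\partial J(\theta)}{\partial z^{(l)}_k}, \quad \forall k \in \{1, \dots, s_l\}, \qquad \text{and} \qquad \delta^{(l)} = \nabla_{z^{(l)}} J(\theta) \in \mathbb{R}^{s_l}$$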

So we have:

$$\frac{\partial J(\theta)}{\partial \theta^{(l)}_{i,j}} = \delta^{(l)}_i . a^{(l-1)}_j$$
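The result can be checked numerically on a single layer. The sketch below assumes a toy cost $J = \frac{1}{2} \lVert z^{(l)} - t \rVert^2$, where $t$ is an arbitrary target vector chosen only because its δ is simply $z^{(l)} - t$. It compares $\delta_i \, a_j$ with finite-difference derivatives; all function names are illustrative. Column $j = 0$ of the result, where $a_0 = 1$, contains $\delta_i$ itself.

```python
def layer_z(theta, a_prev):
    # z_k = [theta_k]^T . a_prev for each row k of theta
    return [sum(w * v for w, v in zip(row, a_prev)) for row in theta]


def toy_cost(theta, a_prev, target):
    # Hypothetical J that depends on theta only through z: 0.5 * ||z - target||^2
    z = layer_z(theta, a_prev)
    return 0.5 * sum((zk - tk) ** 2 for zk, tk in zip(z, target))


def grad_from_delta(theta, a_prev, target):
    z = layer_z(theta, a_prev)
    delta = [zk - tk for zk, tk in zip(z, target)]      # delta_k = dJ/dz_k for toy_cost
    return [[d * aj for aj in a_prev] for d in delta]   # dJ/dtheta_ij = delta_i * a_j


def grad_numerical(theta, a_prev, target, eps=1e-6):
    # Central finite differences on every weight theta_ij
    grad = []
    for i, row in enumerate(theta):
        grad_row = []
        for j in range(len(row)):
            plus = [r[:] for r in theta]
            minus = [r[:] for r in theta]
            plus[i][j] += eps
            minus[i][j] -= eps
            diff = toy_cost(plus, a_prev, target) - toy_cost(minus, a_prev, target)
            grad_row.append(diff / (2 * eps))
        grad.append(grad_row)
    return grad


a_prev = [1.0, 0.4, -0.7]                      # a^(l-1), bias unit a_0 = 1 first
theta = [[0.2, -0.5, 0.3], [0.1, 0.8, -0.6]]   # theta^(l): s_l = 2, s_(l-1) = 2
target = [0.5, -0.2]
print(grad_from_delta(theta, a_prev, target))
print(grad_numerical(theta, a_prev, target))
```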

In particular, for the weights of the bias node, since $a^{(l)}_0 = 1$ for all $l$:

$$\frac{\partial J(\theta)}{\partial \theta^{(l)}_{i,0}} = \delta^{(l)}_i$$

Value of δ for layer L

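Now let’s find δ for the output layer (layer $L$):

$$\delta^{(L)}_i = \frac{\partial J(\theta)}{\partial z^{(L)}_i} = \sum_{k=1}^{s_L} \frac{\partial J(\theta)}{\partial a^{(L)}_k} \frac{\partial a^{(L)}_k}{\partial z^{(L)}_i}$$

As $a^{(L)}_k = g\left(z^{(L)}_k\right)$ depends only on $z^{(L)}_k$, we have $\frac{\partial a^{(L)}_k}{\partial z^{(L)}_i} = 0$ if $k \neq i$. Hence:

$$\delta^{(L)}_i = \frac{\partial J(\theta)}{\partial a^{(L)}_i} \frac{\partial g\left(z^{(L)}_i\right)}{\partial z^{(L)}_i} = \frac{\partial J(\theta)}{\partial a^{(L)}_i} . g'\left(z^{(L)}_i\right)$$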
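The formula holds for any differentiable cost. As an illustration, assume a sigmoid activation and, for a single training example, the cross-entropy cost $J = -\sum_k \left[y_k \log a_k + (1 - y_k) \log(1 - a_k)\right]$, which is the cost of Professor Ng's course with $m = 1$ and $\lambda = 0$ (the article itself fixes neither $g$ nor the cost). Then $\frac{\partial J}{\partial a_i} = \frac{a_i - y_i}{a_i(1 - a_i)}$ and $g'(z_i) = a_i(1 - a_i)$, so $\delta^{(L)}_i$ reduces to $a_i - y_i$. The sketch checks this against the general formula; the function names are illustrative.

```python
import math


def sigmoid(t):
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def sigmoid_prime(t):
    # g'(z) = g(z) * (1 - g(z)) for the sigmoid
    s = sigmoid(t)
    return s * (1.0 - s)


def example_cost(z_out, y):
    # Assumed cost for a single example (m = 1): cross-entropy of sigmoid outputs
    total = 0.0
    for zk, yk in zip(z_out, y):
        a = sigmoid(zk)
        total -= yk * math.log(a) + (1 - yk) * math.log(1 - a)
    return total


def delta_output(z_out, y):
    # delta^(L)_i = dJ/da^(L)_i * g'(z^(L)_i)
    deltas = []
    for zk, yk in zip(z_out, y):
        a = sigmoid(zk)
        dJ_da = -yk / a + (1 - yk) / (1 - a)
        deltas.append(dJ_da * sigmoid_prime(zk))
    return deltas


z_out = [0.3, -1.2, 2.0]   # z^(L)
y = [1.0, 0.0, 1.0]
print(delta_output(z_out, y))
print([sigmoid(zk) - yk for zk, yk in zip(z_out, y)])  # same values: a^(L) - y
```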

Relationship between δ of layer l and δ of layer (l+1)

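Now, we look for a relation between δ of layer $l$ and δ of the next layer $(l+1)$:

$$\delta^{(l)}_i = \frac{\partial J(\theta)}{\partial z^{(l)}_i} = \sum_{k=1}^{s_{l+1}} \frac{\partial J(\theta)}{\partial z^{(l+1)}_k} \frac{\partial z^{(l+1)}_k}{\partial z^{(l)}_i}$$

With:

$$z^{(l+1)}_k = \left[\theta^{(l+1)}_k\right]^T . a^{(l)} = \sum_{p=0}^{s_l} \theta^{(l+1)}_{k,p} \times a^{(l)}_p, \quad \text{where } a^{(l)}_0 = 1 \text{ and } a^{(l)}_p = g\left(z^{(l)}_p\right) \text{ for } p \geq 1$$

And, since only the term $p = i$ depends on $z^{(l)}_i$:

$$\frac{\partial z^{(l+1)}_k}{\partial z^{(l)}_i} = \theta^{(l+1)}_{k,i} . g'\left(z^{(l)}_i\right)$$

Hence:

$$\delta^{(l)}_i = \sum_{k=1}^{s_{l+1}} \delta^{(l+1)}_k . \theta^{(l+1)}_{k,i} . g'\left(z^{(l)}_i\right)$$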

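The meaning of this equation is the following: the error $\delta^{(l)}_i$ of unit $i$ in layer $l$ is the sum of the errors $\delta^{(l+1)}_k$ of the units of layer $(l+1)$, each weighted by the weight $\theta^{(l+1)}_{k,i}$ connecting unit $i$ to unit $k$, multiplied by the derivative $g'\left(z^{(l)}_i\right)$ of the activation function. The error is thus propagated backward, from layer $(l+1)$ to layer $l$.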
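The recurrence can be sketched directly, unit by unit. The sketch assumes a sigmoid activation and stores $z^{(l)}$ 0-indexed in Python while $\theta$ keeps the bias in column 0. To check the result, it uses a hypothetical toy cost $J = \frac{1}{2} \lVert z^{(l+1)} \rVert^2$, for which $\delta^{(l+1)} = z^{(l+1)}$, and compares against finite differences. Function names are illustrative.

```python
import math


def sigmoid(t):
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def delta_previous_layer(delta_next, theta_next, z):
    """delta^(l)_i = sum_k delta^(l+1)_k * theta^(l+1)_{k,i} * g'(z^(l)_i).

    theta_next = theta^(l+1), with s_(l+1) rows and s_l + 1 columns. Column 0
    holds the bias weights and is never read: the bias unit a^(l)_0 = 1 does
    not depend on z^(l).
    """
    deltas = []
    for i in range(1, len(z) + 1):                # units i = 1..s_l
        s = sigmoid(z[i - 1])                     # z is stored 0-indexed
        g_prime = s * (1.0 - s)
        back = sum(d * row[i] for d, row in zip(delta_next, theta_next))
        deltas.append(back * g_prime)
    return deltas


# Toy check: J = 0.5 * ||z^(l+1)||^2, so that delta^(l+1) = z^(l+1)
theta_next = [[0.1, 0.4, -0.3], [-0.2, 0.7, 0.5]]   # s_(l+1) = 2, s_l = 2
z = [0.3, -0.8]                                      # z^(l)


def z_next(z_l):
    a_l = [1.0] + [sigmoid(v) for v in z_l]         # a^(l) with its bias unit
    return [sum(w * v for w, v in zip(row, a_l)) for row in theta_next]


def toy_cost(z_l):
    return 0.5 * sum(v * v for v in z_next(z_l))


print(delta_previous_layer(z_next(z), theta_next, z))
```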

The backpropagation algorithm explained

We have the following:

- we have found a quantity δ for layer $l$ such that, when we multiply it by the output of layer $(l-1)$, we obtain the partial derivative of the cost function $J$ with respect to the weights θ of layer $l$,

- δ for layer $l$ can be computed from δ for layer $(l+1)$,

- as we have a training set, we can compute the values of δ for layer $L$.

So, after a forward pass that computes every $z^{(l)}$ and $a^{(l)}$, we start by computing the values of δ for layer $L$. As we have a relation between δ for layer $l$ and δ for layer $(l+1)$, we can then compute the values for layers $(L-1), (L-2), \dots, 2$.

Finally, we compute the partial derivative of the cost function $J$ with respect to the weights θ of each layer $l$, by multiplying δ for layer $l$ by the output of layer $(l-1)$.
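Putting these steps together gives the whole algorithm for one training example: a forward pass, δ for layer $L$, the backward recurrence, then $\delta_i \, a_j$. The sketch below is a plain-Python illustration under assumptions the article does not make: a sigmoid activation and a cross-entropy cost for a single example ($m = 1$, $\lambda = 0$), for which $\delta^{(L)} = a^{(L)} - y$. All names and weight values are illustrative.

```python
import math


def sigmoid(t):
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def forward(thetas, x):
    """Forward pass; returns z^(l) and a^(l) for l = 1..L (z^(1) is None)."""
    a = [1.0] + list(x)
    zs, acts = [None], [a]
    for idx, theta in enumerate(thetas):
        z = [sum(w * v for w, v in zip(row, a)) for row in theta]
        g_z = [sigmoid(v) for v in z]
        a = g_z if idx == len(thetas) - 1 else [1.0] + g_z   # no bias on a^(L)
        zs.append(z)
        acts.append(a)
    return zs, acts


def cost(thetas, x, y):
    # Assumed cost for one example (m = 1, lambda = 0): cross-entropy
    a_out = forward(thetas, x)[1][-1]
    return -sum(yk * math.log(ak) + (1 - yk) * math.log(1 - ak)
                for ak, yk in zip(a_out, y))


def backprop(thetas, x, y):
    """Return dJ/dtheta^(l) for every layer, as lists of rows."""
    zs, acts = forward(thetas, x)
    # Layer L: dJ/da * g'(z) simplifies to a^(L) - y for sigmoid + cross-entropy
    delta = [ak - yk for ak, yk in zip(acts[-1], y)]
    grads = [None] * len(thetas)
    for idx in range(len(thetas) - 1, -1, -1):        # layers L, L-1, ..., 2
        theta = thetas[idx]
        # dJ/dtheta_ij = delta_i * a^(l-1)_j (j = 0 is the bias unit)
        grads[idx] = [[d * aj for aj in acts[idx]] for d in delta]
        if idx > 0:
            # delta^(l-1)_i = sum_k delta^(l)_k * theta^(l)_{k,i} * g'(z^(l-1)_i)
            z_prev = zs[idx]
            delta = [
                sum(d * row[i + 1] for d, row in zip(delta, theta))
                * sigmoid(zi) * (1.0 - sigmoid(zi))
                for i, zi in enumerate(z_prev)
            ]
    return grads


thetas = [
    [[0.1, -0.3, 0.5], [0.2, 0.4, -0.1], [-0.3, 0.2, 0.3]],  # theta^(2): 3 x (2+1)
    [[0.05, 0.3, -0.2, 0.4], [-0.1, 0.6, 0.1, -0.5]],        # theta^(3): 2 x (3+1)
]
x, y = [0.8, -0.4], [1.0, 0.0]
grads = backprop(thetas, x, y)
print(grads)
```

Comparing `grads` with central finite differences of `cost`, changing one weight at a time by a small ±ε, is the gradient-checking technique taught in Professor Ng's course; the two should agree to several decimal places.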

The vectorized backpropagation equations

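The first equation gives the partial derivatives of $J$ with respect to $\theta^{(l)}$, this time including the regularization term:

$$\nabla_{\theta^{(l)}} J(\theta) = \begin{bmatrix} \frac{\partial J}{\partial \theta^{(l)}_{1,0}} & \dots & \frac{\partial J}{\partial \theta^{(l)}_{1,j}} & \dots & \frac{\partial J}{\partial \theta^{(l)}_{1,s_{l-1}}} \\ \vdots & & \vdots & & \vdots \\ \frac{\partial J}{\partial \theta^{(l)}_{i,0}} & \dots & \frac{\partial J}{\partial \theta^{(l)}_{i,j}} & \dots & \frac{\partial J}{\partial \theta^{(l)}_{i,s_{l-1}}} \\ \vdots & & \vdots & & \vdots \\ \frac{\partial J}{\partial \theta^{(l)}_{s_l,0}} & \dots & \frac{\partial J}{\partial \theta^{(l)}_{s_l,j}} & \dots & \frac{\partial J}{\partial \theta^{(l)}_{s_l,s_{l-1}}} \end{bmatrix} = \delta^{(l)} \otimes \left[a^{(l-1)}\right]^T + \frac{\lambda}{m} \theta^{(l)}_{\text{zero bias}}$$

where $\theta^{(l)}_{\text{zero bias}}$ is $\theta^{(l)}$ with $\theta^{(l)}_{i,0} = 0$, $\forall i \in \{1, \dots, s_l\}$ (the regularization term does not include the bias nodes' weights), and $\delta^{(l)} \otimes \left[a^{(l-1)}\right]^T$ is the outer product of the two vectors. This product is computed for each training example and summed over the $m$ examples; the $\frac{1}{m}$ factor of $J$ is already contained in $\delta^{(L)}$ through $\nabla_{a^{(L)}} J(\theta)$.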
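In code, the first equation amounts to accumulating outer products and then adding the regularization term on every column except the bias column. This sketch assumes that each $\delta^{(l)}$ is computed from the cost of a single example, without the $\frac{1}{m}$ factor, so it averages the outer products explicitly over the $m$ examples. The function name and data layout (lists of rows, bias in column 0) are illustrative.

```python
def regularized_gradient(deltas, prev_activations, theta, lam):
    """Gradient of J w.r.t. theta^(l) for a training set of m examples.

    deltas[e]           : delta^(l) for example e, from the per-example cost
    prev_activations[e] : a^(l-1) for example e, bias unit a_0 = 1 included
    theta               : theta^(l), s_l rows of s_(l-1) + 1 weights
    lam                 : regularization parameter lambda
    """
    m = len(deltas)
    rows, cols = len(theta), len(theta[0])
    grad = [[0.0] * cols for _ in range(rows)]
    # Average of the outer products delta^(l) (x) [a^(l-1)]^T over the m examples
    for delta, a_prev in zip(deltas, prev_activations):
        for i in range(rows):
            for j in range(cols):
                grad[i][j] += delta[i] * a_prev[j] / m
    # + (lambda / m) * theta_zero_bias: column j = 0 is not regularized
    for i in range(rows):
        for j in range(1, cols):
            grad[i][j] += lam / m * theta[i][j]
    return grad


theta = [[1.0, 2.0, -1.0]]                     # s_l = 1, s_(l-1) = 2
deltas = [[0.5], [-0.25]]                      # m = 2 examples
prev_activations = [[1.0, 2.0, 0.0], [1.0, 0.0, 4.0]]
print(regularized_gradient(deltas, prev_activations, theta, lam=0.5))
# [[0.125, 1.0, -0.75]]
```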

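The second equation gives the relation between δ in layer $l$ and δ in layer $(l+1)$:

$$\delta^{(l)} = \left[\left(\theta^{(l+1)}_{\text{remove bias}}\right)^T . \delta^{(l+1)}\right] \odot g'\left(z^{(l)}\right)$$

where $\theta^{(l+1)}_{\text{remove bias}}$ is $\theta^{(l+1)}$ with the column of the bias nodes' weights (column $j = 0$) removed, and $\odot$ is the element-wise product.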

The third equation gives the value of δ for layer $L$:

$$\delta^{(L)} = \nabla_{a^{(L)}} J(\theta) \odot g'\left(z^{(L)}\right)$$

Conclusion

This cheat sheet explains the backpropagation algorithm used to train a neural network. I created this article after following Professor Andrew Ng's great Machine Learning course on Coursera. The conventions used in this article are not exactly the ones used in Professor Ng's course, nor exactly the ones used in Michael Nielsen's article.

If you notice any error, please do not hesitate to contact me.